问题描述

今天下午在hive中创建普通表的时候,死活创建不成功,但是可以创建数据库,后来发现也可以创建外部表。报错信息如下:

hive (testdb)> CREATE TABLE compress_test(

> cdn string,

> region string,

> level string,

> time string,

> ip string,

> domain string,

> url string,

> traffic bigint)

> ROW FORMAT DELIMITED

> FIELDS TERMINATED BY '\t' ;

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:Got exception: java.net.ConnectException Call From hadoop001/192.168.100.111 to localhost:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused)

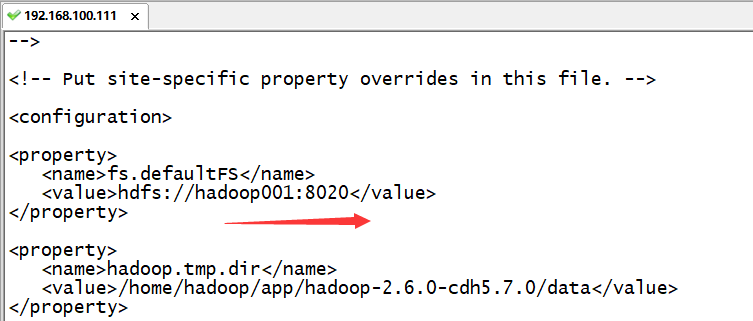

根据错误提示,说是hadoop RPC通信端口9000有问题,查看了hadoop配置文件core-site.xml,里面的通信端口是配置的8020。如下图:

始终不明白为啥会报这样的错误?后来重新创建了一个数据库haha,居然创建成功了。

hive (testdb)> create database haha;

OK

Time taken: 0.069 seconds

hive (testdb)>

用haha数据库可以创建表

hive (testdb)>

>

>

> use haha;

OK

Time taken: 0.019 seconds

hive (haha)>

hive (haha)> CREATE TABLE compress_test(

> cdn string,

> region string,

> level string,

> time string,

> ip string,

> domain string,

> url string,

> traffic bigint)

> ROW FORMAT DELIMITED

> FIELDS TERMINATED BY '\t' ;

OK

Time taken: 0.065 seconds

查看MySQL中的元数据信息

首先查看元数据在mysql中哪个库下,用户名和密码

[hadoop@hadoop001 conf]$ cat hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive?createDatabaseIfNotExist=true&characterEncoding=UTF-8</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

进入到hive数据库中

查看tbls 和 dbs 表

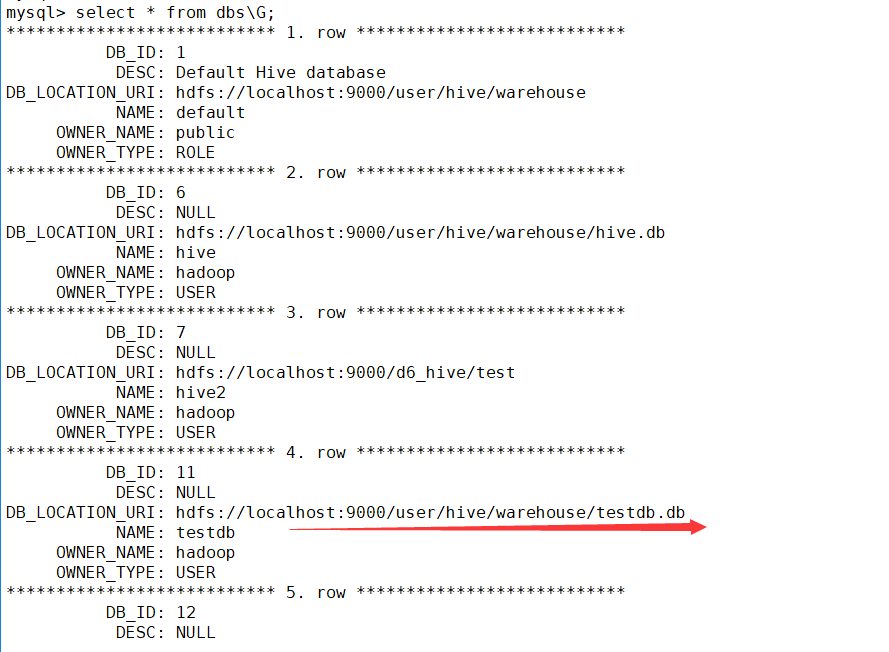

mysql> select * from dbs\G;

*************************** 1. row ***************************

DB_ID: 1

DESC: Default Hive database

DB_LOCATION_URI: hdfs://localhost:9000/user/hive/warehouse

NAME: default

OWNER_NAME: public

OWNER_TYPE: ROLE

*************************** 2. row ***************************

DB_ID: 6

DESC: NULL

DB_LOCATION_URI: hdfs://localhost:9000/user/hive/warehouse/hive.db

NAME: hive

OWNER_NAME: hadoop

OWNER_TYPE: USER

*************************** 3. row ***************************

DB_ID: 7

DESC: NULL

DB_LOCATION_URI: hdfs://localhost:9000/d6_hive/test

NAME: hive2

OWNER_NAME: hadoop

OWNER_TYPE: USER

*************************** 4. row ***************************

DB_ID: 11

DESC: NULL

DB_LOCATION_URI: hdfs://localhost:9000/user/hive/warehouse/testdb.db

NAME: testdb

OWNER_NAME: hadoop

OWNER_TYPE: USER

发现DB_LOCATION_URI: hdfs://localhost:9000

这里始终不明白为啥RPC通信端口是9000?不是8020?

于是乎又切换到hive 的testdb库下,继续操作,这次换了一条SQL,即创建外部表的SQL,发现可以创建外部表。

hive (testdb)> CREATE EXTERNAL TABLE compress_test(

> cdn string,

> region string,

> level string,

> time string,

> ip string,

> domain string,

> url string,

> traffic bigint)

> ROW FORMAT DELIMITED

> FIELDS TERMINATED BY '\t'

> location '/hadoop/compress/' ;

OK

Time taken: 0.093 seconds

再次查看mysql中的元数据信息

mysql> select * from dbs\G;

*************************** 1. row ***************************

DB_ID: 1

DESC: Default Hive database

DB_LOCATION_URI: hdfs://localhost:9000/user/hive/warehouse

NAME: default

OWNER_NAME: public

OWNER_TYPE: ROLE

*************************** 2. row ***************************

DB_ID: 6

DESC: NULL

DB_LOCATION_URI: hdfs://localhost:9000/user/hive/warehouse/hive.db

NAME: hive

OWNER_NAME: hadoop

OWNER_TYPE: USER

*************************** 3. row ***************************

DB_ID: 7

DESC: NULL

DB_LOCATION_URI: hdfs://localhost:9000/d6_hive/test

NAME: hive2

OWNER_NAME: hadoop

OWNER_TYPE: USER

*************************** 4. row ***************************

DB_ID: 11

DESC: NULL

DB_LOCATION_URI: hdfs://localhost:9000/user/hive/warehouse/testdb.db

NAME: testdb

OWNER_NAME: hadoop

OWNER_TYPE: USER

结果发现RPC通信端口还是9000和原来是一样的。

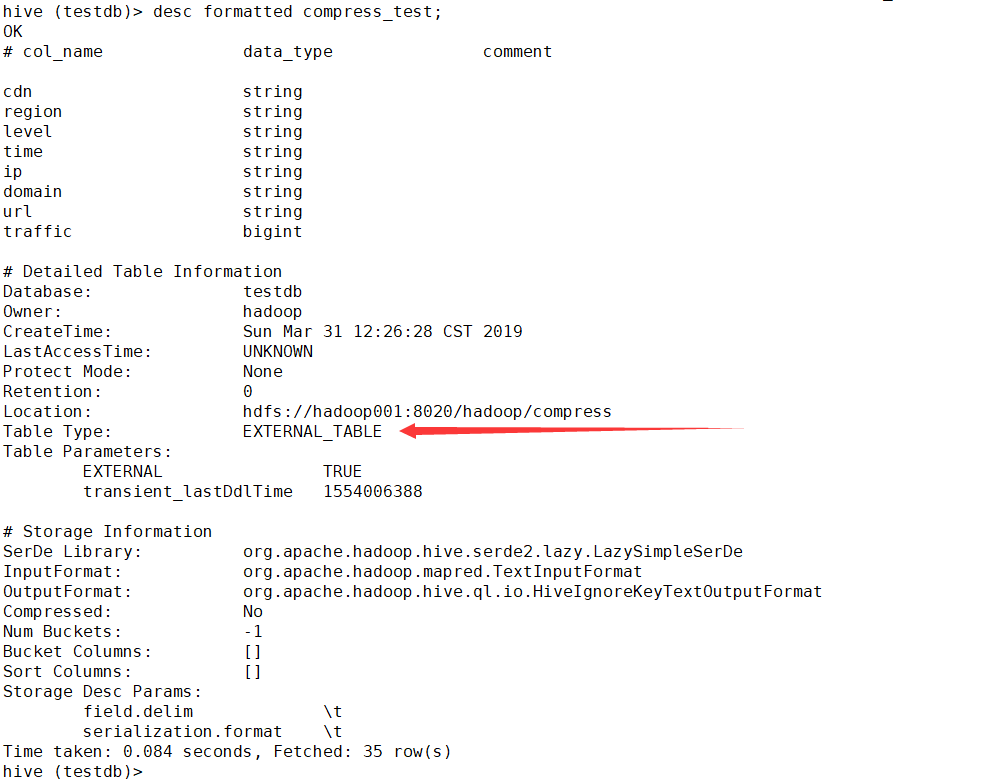

#### 查看外部表的表结构

发现外部表指向的HDFS目录的PRC通信端口是9000

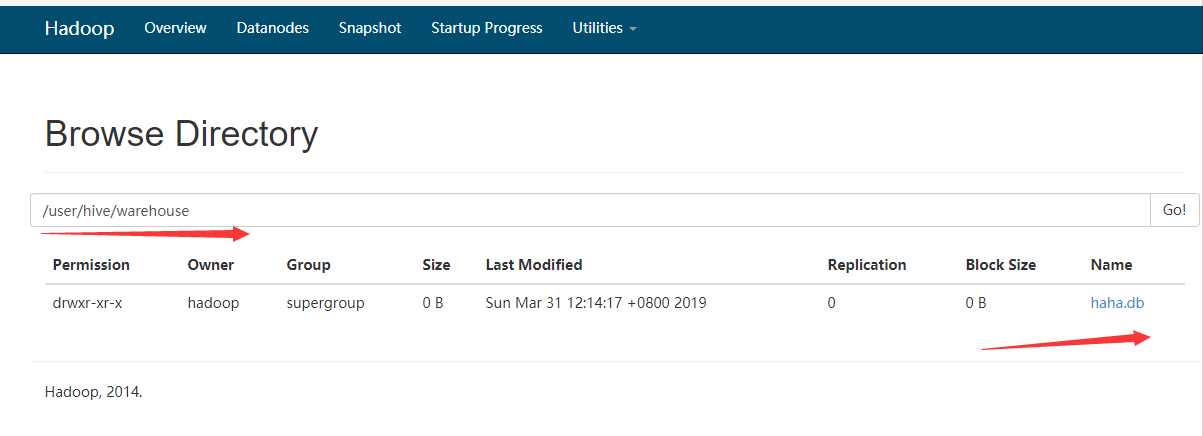

#### 查看hive在hdfs存放在hdfs中的信息

发现只有刚才新创建的数据库信息,并没有其他的数据库信息

瞬间发现,以前的那些hive数据库的信息是在Hadoop PRC 9000中创建,后来修改了端口。所以一直报错是9000端口有异常,连接拒绝。

### 如何同步以前的数据

首先在hdfs中创建缺失的目录

[hadoop@hadoop001 hadoop]$ hdfs dfs -mkdir -p /user/hive/warehouse/testdb.db

[hadoop@hadoop001 hadoop]$

然后刷新mysql中的元数据信息

更新dbs表

mysql> update dbs set DB_LOCATION_URI='hdfs://hadoop001:8020/user/hive/warehouse/testdb.db' where DB_ID=11;

Query OK, 1 row affected (0.01 sec)

Rows matched: 1 Changed: 1 Warnings: 0

查看mysql中的元数据信息

mysql> select * from dbs\G;

*************************** 1. row ***************************

.

.

.

*************************** 4. row ***************************

DB_ID: 11

DESC: NULL

DB_LOCATION_URI: hdfs://hadoop001:8020/user/hive/warehouse/testdb.db

NAME: testdb

OWNER_NAME: hadoop

OWNER_TYPE: USER

在hive的testdb下创建数据库

hive (testdb)> create table student

> (id int,name string);

OK

Time taken: 0.072 seconds

查看表结构

hive (testdb)> desc formatted student;

OK

# col_name data_type comment

id int

name string

# Detailed Table Information

Database: testdb

Owner: hadoop

CreateTime: Sun Mar 31 12:56:58 CST 2019

LastAccessTime: UNKNOWN

Protect Mode: None

Retention: 0

Location: hdfs://hadoop001:8020/user/hive/warehouse/testdb.db/student

Table Type: MANAGED_TABLE

注意:在RPC9000端口下,创建的数据库,表,插入的数据,只用更新元数据的dbs表就行。同理,其他的按照相同的办法处理